Tutorial 2 - Understanding fMRI Statistics, especially the General Linear Model (GLM)

Goals

To understand how statistics, even simple statistics such as correlations, can be employed on a voxelwise basis to analyze fMRI data

To develop an intuitive understanding of the how the General Linear Model (GLM) is used for univariate, single-subject, single-run fMRI data analysis

To understand the significance of correlation coefficients, beta weights and p values in time course analyses

To learn how to use contrasts in statistical analyses

Relevant Lectures

Lecture 03a: fMRI statistics: Correlation

Lecture 03b: fMRI statistics: Using the General Linear Model (GLM) to do a correlation

Lecture 03c: fMRI statistics: Extending the GLM to more conditions and multiple runs

Accompanying Data

Background and Overview

This lecture assumes that you have an understanding of basic statistics. If you have taken a stats course in Psychology and worked with behavioral data, many of the statistical tests that can be employed for fMRI will be familiar to you — correlations, t tests, analyses of variance (ANOVAs) and so forth. These tests can be applied to fMRI data as well, using similar logic. As one example, you can compute a correlation between an expected time course and the actual time course from a voxel (or a cluster of voxels in a specific brain region) to see how well your expectations were met. As another example, you can extract an estimate of how active the voxel or region was in different conditions and then simply perform a t test to examine whether activation levels differ between two conditions. Alternatively, if you have a factorial design, such as our Main Experiment, which tested Faces/Hands Categories x Left/Centre/Right Directions (a 2 x 3 design), you can run an ANOVA to look for main effects and interactions.

All of these common statistical tests can be applied through a General Linear Model (GLM). The GLM is the "swiss army knife" of statistics, allowing you to accomplish many different goals with one tool.

Any statistical test needs two pieces of information:

- signal: how much variance in the data can be explained by a model (e.g., a correlation, a difference between two conditions in a t test, or a difference between a combination of conditions in a factorial design that is analyzed by an ANOVA)

- noise: how much variance remains unexplained

Statistical significance -- the degree of confidence that an effect is "real" depends on the ratio of explained variance to total variance (explained + unexplained variance). Put simply, if you have a big difference between conditions and little variance within each condition, you can be quite confident there is a legitimate difference; whereas, if you have a small effect in a very noisy data set, you would have little confidence that there was really a difference.

For fMRI data, there are two levels at which we can compute variance.

- Variability over time. If we only have data from one participant, we can look at how the strength of a difference in activation relative to the variability of the time course in a given voxel or region. This temporal variability can be parsed into effects due to the expected changes between conditions vs. noise. In this case, data points are time points.

- Variability over participants. If we have data from multiple participants, we can look at the average size of an activation difference across participants relative to the variability among participants. In this case, data points are participants.

In this tutorial, we will examine the first case -- how statistics can be used in the data from a single participant to estimate effect sizes relative to variability over time. We will begin with a Pearson correlation, which is one of the simplest and most straightforward statistical tests to understand. Then we will see how the GLM can be used to perform a correlation and also to generate more complex models that better explain the data.

In a later tutorial, we will learn how to employ the second approach, in which we can combine data between participants and look at the consistency of effects across individuals.

Understanding fMRI statistics for data over time

Time Series: Data, Signal, and Noise

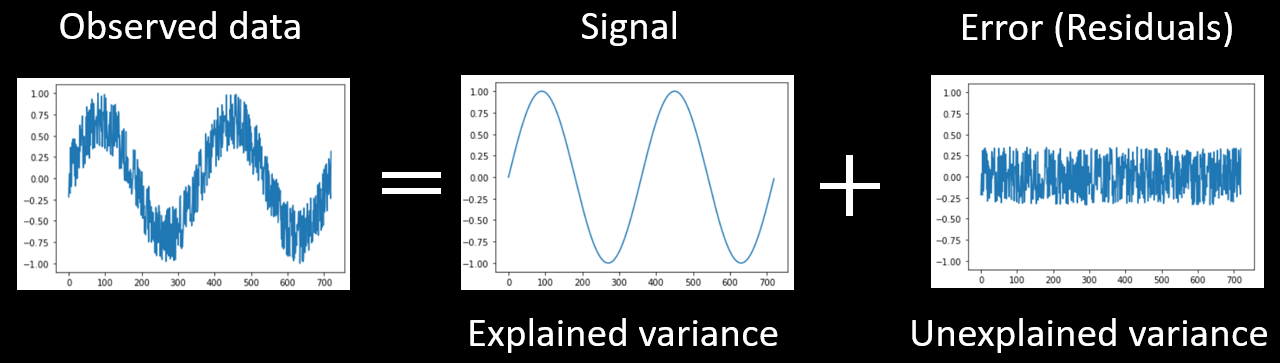

Let's begin by looking at how we can statistically analyze data that changes over time. A time course is shown below. This time course depict fMRI activation over time... or it could be any kind of time series (e.g., daily temperature over a two-year period).

We might be able to explain some of this variability with a model that captures much of the variability in the data, here a sinusoidal model of changes over time. The variance that can be accounted for by the model is the signal. However, the model can not explain all of the data. The error or noise (the part of the variance we cannot explain/model) can be seen in the residuals, which are computed by subtracting the model from the data.

These three parts are depicted in Figure 1 below.

Figure 2-1. Any data (such as a time series) can be decomposed into explained and unexplained variance.

Applying correlations to time-series data

In order to determine whether a voxel is reliably reacts to a certain stimulus, we must first measure how reliabily it changes with that stimulus/condition. To do this, we can use correlations to asses how closely the pattern of a predictor is related to the time course. A high correlation between a predictor and a timecourse indicates that the voxel's or region's activity is highly related to the type of stimuli presented, while a low correlation indicates that the voxel's or region's activity is not related to that specific type of stimuli.

Another way of thinking about it is in terms of signal-to-noise ratio . One way of quantifying this is calculating the percent of explained variance (explained variance/total variance). This perentage can easily be calculated by squaring the correlation coefficient(r2). A high signal to noise ratio(lots of signal with little noise) will have greater percent of explained variance, while a lower signal to noise ratio (little signal with lots of noise) will result in a smaller percent of explained variance.

Let's examine a few simple cases.

Activity #1

Open Widget 1 in a new window by clicking on the plot. (Note: if you have trouble opening the animation, please see our troubleshooting page).

Widget 1 shows a simulated 'data' time course in grey and a single predictor in purple using arbitrary units (y axis) over time in seconds (x axis). The predictor can be multiplied by a beta weight (scaling factor) and a constant can be added using the sliders (more will be said on these two parameters later in the tutorial). Below the time course is the residual time course, which we obtain by subtracting the model -- that is the scaled predictor -- from our data at each point in time (i.e., data - predictor = residuals).

Adjust the sliders for Voxel 1 so that the purple line (predictor) overlaps the black line (signal).

Question 1:

a) What is the value of the correlation coefficient? What does this value mean? Hint: consider the pattern (shapes) of the purple and black lines.

b) From past statistics courses, you are likely used to seeing correlations depicted as scatterplots. What would the scatterplot and line of best fit look like for this data? -- sketch it roughly.

c) What percentage of the total variance does the current model (purple predictor) explain? What percentage of the total variance would the best model account for?

d) Does the correlation coefficient change when moving the sliders (i.e., changing the beta weights)? Why or why not?

In practice, we don't expect to find a perfect fit between predictors and data. Voxel 2 is a more realistic example of noisy data.

Select Voxel 2 and adjust the sliders until you find the beta weight and constant that best fit the data.

Question 2:

a) What is the new correlation coefficient? Why is it different from Voxel 1?

b) What would the scatterplot look like now?

c) What percentage of the total variance does the current model(purple predictor) explain for Voxel 2? What can we say about the difference in signal to noise ratio between Voxel 1 and Voxel 2?

Applying correlations to real fMRI data

Now let's look at some real time courses by considering the simplest possible example: one run from one participant with two conditions. Note that we always need at least two conditions so that we can examine differences between conditions using subtraction logic. This is because measures of the BOLD signal in a single condition are affected not only by neural activation levels but also by extraneous physical factors (e.g., the coil sensitivity profile) and biological factors (e.g., the vascularization of an area). As such, we always need to compare conditions to look at neural activation (indirectly) while subtracting out other factors.

In this case, we will look at the first localizer run. Recall that this run is composed of four visual conditions (faces, hands, bodies and scrambled) and 1 baseline condition. For now, we will group the four visual conditions into one visual condition, leaving us with only two conditions (visual stimulation vs baseline).

Activity #2

Open the widget in a new window and adjust the sliders to fit the visual predictor (in pink) to Voxel A's time course as closely as possible.

Question 3:

a) How many data points are there in total? How many degrees of freedom (df) does the model require? Conceptually, what does this/do these df correspond to? How many df are assigned to the residuals?

b) What is the correlation coefficient for Voxel A? How would you interpret this coefficient in terms of strength of the relationship and the voxel's response to visual stimulation? What is the percentage of explained variance?

c) How much variance does the model account for Voxels A, B, C and D. What is the most obvious factor that hinders the fit of the model for Voxel B?

d) Voxel D has a correlation of r = -0.38. How do you interpret a negative correlation for fMRI data?

e) Voxels E and F have interpretable time courses but the correlations are not very high. Why?

What about p values?

There is always a chance that a certain pattern of data could occur randomly. For example, if you flip a normal coin 10X in a row, there is a small but non-zero chance -- p = 0.510 = .001 = 1/1000 = 0.1% -- that heads could occur on every flip. If we were trying to determine whether a coin was a magical "heads-only coin", getting 10 sequential heads outcomes would lead us to be fairly confident that it was. Of course, we would be less confident if we only got three heads outcomes, in which case the likelihood due to chance would be p = 0.53 = .125 = 1/8 = 12.5%. And we would be more confident if we got 20 sequential heads outcomes, in which case the likelihood due to chance would be p = 0.510 = .000001 = 1/1,000,000. Thus as you can see, one factor that reduces the likelihood of chance findings is the amount of data acquired. Generally researchers are willing to believe any outcome that has a likelihood of arising due to chance of p < .05 = 5%. Such results are deemed statistically significant.

The p value is the probability of obtaining a given result purely due to chance, as estimated based on how noisy the data are. Because the correlation estimates how much of the variability in the data is explained, higher r values will be associated with lower p values.

Question 4:

a) Using your answers from Question 3 above along with the "p from r" section of this online statistical calculator, determine whether or not the correlations for Voxels A, B, C and D are statistically significant (when no correction for multiple comparisons is applied).

b) Is a p value always associated to the same correlation coefficient? Why or why not?

c) If we use a threshold of p < .05 and examine the correlation between a predictor and a time course for 100 different voxels (basically, we calculated 100 correlations), how many voxels might be statistically significant due to chance? If we used a threshold of p < .01? What type of error (I or II) increases with the number of statistical tests?

Modelling the hemodynamic response

Recall that fMRI is an indirect mesure of neural activity. The expected neural activation is often modeled using a box car function. We then use a mathematical procedure called convolution to model the expected BOLD response based on the known relationship between neuronal activity and BOLD -- the hemodynamic response function (HRF) .

Let's first understand why having an accurate model of BOLD, based on the HRF, is valuable.

Using Widget 3, find the best parameters for the neural (box car) model and note the correlation and error term (squared error).

Then press on the convolve button to transform the boxcar function into the HRF. Again find the best parameters for the BOLD (convolved) model and note the correlation and error term (squared error).

Question 5:

a) Based on shape of the BOLD predictor, which type of Hemodynamic Response Function was employed in the convolution?

b) How does the convolution affect the quality of the model fit? What would be the consequences of using an HRF that did not match the participant's actual hemodynamics well (for example, if you used the standard model on a participant who had a much larger post-stimulus undershoot than most)?

II. Whole-brain Voxelwise Correlations in BrainVoyager

In the previous sections, we learned how to quantify the strength of the relationship between a predictor and a time course for a single voxel using correlation coefficients and p-values. However, we have 194,000 resampled functional voxels inside the brain volume. How can we get the bigger picture?

One way is to do a "Voxelwise" analysis, meaning that we run not just one correlation but one correlation for every voxel. This is sometimes also called a "whole-brain" analysis because typically the scanned volume encompasses the entire brain (although this is not necessarily the case, for example if a researcher scanned only part of the brain at high spatial resolution).

Instructions

1) Load the anatomical volume and the functional data. This procedure is explained in tutorial 1, but will be reminded for you here:

- Anatomical data: Select File/Open... , navigate to the BrainVoyager Data folder(or where ever the files are located), and then select and open sub-10_ses-01_T1w_BRAIN_IIHC.vmr

- Functional data: Select Analysis/Link Volume Time Course (VTC) File... . In the window that opens, click Browse , and then select and open sub-10_ses-01_task-Localizer_run-01_bold_256_sinc3_2x1.0_NATIVEBOX.vtc , and then click OK . The VTC should already be linked to the protocol file. You can verify this by looking at the VTC properties (follow steps in Tutorial 1).

Now we can conduct our voxel-wise correlation analysis.

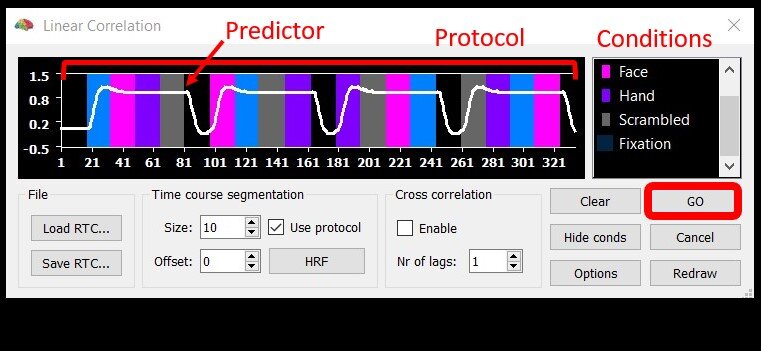

Figure 2-2. Menu to compute Linear Correlation Maps

2) Select Analysis/Compute Linear Correlation Maps...

Now let's generate a box car function with the expected neural response (high for the four main conditions, low for the baseline). If you are using a PC, left-click on any black period and right-click on the blue, pink or purple/green and gray periods. If you are using a Mac, click on any black period and control-click on some part of each of the four colored periods.

Once you have the expected box car function, click on the HRF button to convolve the predictor with the default exercise.

Optional: If you want to see the effect of using a different HRF, you can clear the predictor, regenerate the box car predictor, go into options, select the HRF tab, select Boynton, click OK, and then click HRF. After this, however, repeat these steps using the default two-gamma function.

Click GO.

Figure 2-3. Explanation of how to manually create a box car predictor and convolve it.

Figure 2-4. Shows how the convolved predictor should appear.

Running a voxelwise correlation computes one statistical parameter for every resampled functional voxel (here 194,000 r values). Since we did a correlation, the parameter is r, but as we will see later, other parameters can be estimated (e.g., t for a paired samples contrast, F for an analysis of variance, ANOVA).

Parameters be visualized by generating a statistical "heat map" in which each voxel is assigned a color based on the value of the statistical parameter. (Now you understand what a statistical parametric map is and why a classic fMRI analysis software package is called SPM).

The heat map is generated by assigning a color to each voxel (or leaving it transparent) based upon the level of the parameter. This correspondence is determined by a look-up table (LUT).

Maps are typically overlaid on the anatomical scan to make it easier to see the anatomical location of activation (but remember the map was generated from the functional scans).

If you hover your mouse over different voxels, you will see the correlation coefficent(r) and p value for any given voxel.

Toggle between two different look-up tables that BrainVoyager provides. Select File/Settings..., and in the GUI tab, try out two default options: "New Map Default Colors (v21+)" an "Classic Map Colors (pre-v21)" and click Apply after each selection.

Note: The course slides and tutorials use the Classic colors.

Figure 2-5. Activation depicted with BrainVoyager’s Classic LUT

Figure 2-6. Hovering the mouse over a voxel will show its coordinates, intensity, statistical parameter value and p value.

The mapping between parameter values and the colors in the look-up table (on the right side of the coronal view) can be adjusted. The most important setting is the minimum value or the threshold. Any correlation coefficients closer to zero than the minimum do not get mapped to a color (i.e., the voxels are rendered as transparent). For example, if the threshold is r = .3, they only voxels with r > .3 or r < -.3 will be colored.

There are a few ways to modify the threshold.

3) First, you can adjust the threshold by using the icons (circled in red) in the left side bar. Try it.

Alternatively, if you want to employ a specific threshold, there is a more precise way.

Figure 2-7. The minimum p value (threshold) can be quickly adjusted using these buttons to increase the threshold (bigger blob button) or decrease the threshold (smaller blob button).

4) Select Analysis/Overlay Volume maps... . This will open the Volume Maps window. Next, click on the Statistics tab. Here, we can set the maximum and minimum correlation threshold manually in the Correlation range section. Try changing the min and max values and observe the result on the statistical maps.

Figure 2-8. Many features of the statistical map, including the minimum (threshold) and maximum can be controlled precisely using the Volume Maps control panel.

Question 6:

a) Look at the color scale on the right of the coronal view. What do the numbers on the scale represent? How do the numbers on the scale change when decreasing and increasing the threshold? What do the warm and cool colors represent?

b) Set the threshold to zero and explain what you see. Why are there colored voxels outside the brain? What could we do to limit the colored voxels to the brain volume?

c) Set the minimum threshold to 0.30 and the maximum threshold to 0.80. What occurs when you increase the minimum threshold while keeping the maximum threshold constant? If you keep increasing the minimum threshold, how does your risk of Type I and Type II statistical errors change?

d) Where would you expect significant activation for this contrast? Where would you NOT expect to see legitimate activation for this contrast? Bonus Question: There is one region where you wouldn't expect legitimate activation but you still see very strong activation even with moderately high thresholds. Do you have any idea what might cause this?

e) Subjectively, what threshold do you think gives you "trustworthy" data? Why?

f) What effect does changing the maximum have? What is a good strategy for choosing the maximum value?

Figure 2-9. A specific p value can be specified in Map Options tab of the Volume Maps panel.

5) Now, click on the Map Options tab. Here, we can set a threshold using p values instead of correlation coefficients.

Question 7: Set the threshold to p < .05 and click Apply. Given that a p-value threshold of .05 is the usual statistical standard, why is it suboptimal for voxelwise fMRI analyses?

In the next tutorial, we will consider strategies to deal with this problem.

III. Applying the GLM to Single Voxel Time Courses

Beta Weights, Residuals and the Best Fit

In the first part of the tutorial, we discussed how we can know whether a voxel reliably activates to a certain stimulus. However, that does not inform us on how activated the voxel is. Luckily the GLM can be used to estimate these levels of activation.

The GLM can be used to model data using the following form: y = β0 + β1X1 + β2X2 + ... + βnXn + e where:

- y is the observed or recorded data,

- β0 is a constant or intercept adjustment,

- β1 ... βn are the beta weights or scaling factors

- X1 ... Xn are the independent variables or predictors.

- e is the error (residuals).

In the case of fMRI analyses, you can think of this as modelling a data series (time course) with a combination of predictor time courses, each scaled by beta weights, plus the "junk" time course (residuals) that is left over once you've modelled as much of the variance as you can. These three components of the GLM are illustrated for a simple correlation in the figure below.

Figure 2-10. Here the GLM is used for the simplest possible situation, a correlation.

Using this model, we can try to find the best fit. The best fitting model is found by finding the parameters (beta weights and constant) that maximzes the explained variance(signal), which is also equivalent to minizing the residuals.

Question 8: The formula listed in orange in Figure 10 can be simplified for a correlation. Write the simplified equation and explain what each of the terms corresponds to.

Estimating Beta Weights

In the following animation, adjust the sliders until you think you have optimized the model for Voxel A (i.e., minimized the squared error). Make sure you understand the effect that the four betas and the constant are having on your model. You can test how well you did by clicking the Optimize GLM button, which will find the mathematically ideal solution.

Question 9:

a) How can you tell when you've chosen the best possible beta weight and constant? Consider (1) the similarity of the weighted predicted (purple) and the data (black) in the top panel; (2) the appearance of the residual plot in the bottom panel; and (3) the squared error and sum of residuals?

b) What can you conclude about Voxel A's selectivity for images of faces, hands, bodies and/or scrambled images?

c) Do the same for Voxel's E and F. What can you conclude about their selectivity for images of faces, hands, bodies and/or scrambled images?

d) Define what a beta weight is and what it is not. In other words, what is the difference between a beta weight and a correlation?

What about the baseline?

You might have noticed that our models only have predictors for conditions and none for the baseline, and you might be wondering why that is. To explain this, let's go back to basic agebra.

Imagine you trying to find the value of x in the following equation: 2x + 3 = 5. You might have guessed the answer is x = 1.

Now imagine you are trying to find x for the following equation: 2x + 3y = 5. In this case, there are an inifinite number of solutions, making it hard to know which is the correct one! Adding an extra variable makes the equation unsolvable.

If we use the same number of predictors as there are conditions, we have similar problem with time courses in fMRI analyses. In other words, having an equal number of conditions and predictors makes the model redundant. Let's try it out.

Adjust the sliders until you think you have optimized the model while keeping the constant at 0.

Question 10:

a) What betas did you find? Now compare these to the optimal betas for a single visual predictor. What do you notice?

b) How many possible combinations of betas and constant are possible for the model with baseline and visual predictors? Why is this suboptimal?

IV. Whole-brain Voxelwise GLM in BrainVoyager

In the previous section, we explained how the GLM can be used to model data in a single voxel by finding the best fit (in other words, the optimal beta weights and constant). Now, we can apply this to the whole brain by fitting one GLM for every voxel in the brain.

Conducting a GLM analysis

1) Again, we will remove our previous model (skip this step if already done). Go back to the Browse tab in Volume Maps window, click Delete all, and then Close.

2) Select Analysis/General Linear Model: Single Study. By single study, BrainVoyager means that we will be applying the GLM to a single run.

Click options and make sure that Exclude last condition ("Rest") is checked (the default seems to be that Exclude first condition ("Rest") is checked).

3) Click Define Preds to define the predictors in terms of the PRT file opened earlier and tick the Show All checkbox to visualize them. Notice the shape of the predictors – they are identical to the ones we used earlier for in Question 2. Now click GO to compute the GLM in all the voxels in our functional data set.

Figure 2-11. Defining predictors.

Figure 2-12. This tab on the Single Study GLM dialog allows you to exclude the baseline condition if it is the first or last condition specified.

After the GLM has been fit, you should see a statistical "heat map" . This initial map defaults to showing us statistical differences between the average of all conditions vs. the baseline . More on this later.

In order to create this heat map, BrainVoyager has computed the optimal beta weights at each voxel such that, when multiplied with the predictors, maximal variance in the BOLD signal is explained (under certain assumptions made by the model). Another, equivalent interpretation, is that BrainVoyager is computing beta weights that minimize the residuals. For each voxel, we then ask, “How well does our model of expected activation fit the observed data?” – which we can answer by computing the ratio of explained to unexplained variance.

Figure 2-13. GLM

It is important to understand that, in our example, BrainVoyager is computing four beta weights for each voxel – one for the Face predictor, one for the Hand predictor, one for the Bodies predictor and one for the Scrambled images predictors. And for each voxel, residuals are obtained by computing the difference between the observed signal, and the modelled or predicted signal – which is simply vertically scaled by the beta weights. This is the same as you did manually in Question 2.

But we don't simply want to estimate activation levels for each condition by computing beta weights; we also want to be able to tell if activation levels differ statistically between conditions!

Comparing Activation between Conditions

Just as with statistics on other data (such as behavioral data), we can use the same types of statistics (e.g, t tests, ANOVAs) to understand brain activation differences and the choice of test depends on our experimental design (e.g., use a t test to compare two conditions; use an ANOVA to compare multiple conditions or factorial designs). Statistics provides a way to determine how reliable the differences between conditions are, taking into account how noisy our data are. For fMRI data on single participants, we can estimate how noisy our data are based on the residuals.

For example, we can use a hypothesis test to test whether activation for the Face condition (i.e., the beta weight for Faces) is significantly higher than for Hands, Bodies and Scrambled images (i.e., the beta weight for Hands, Bodies and Scrambled images). Informally, for each voxel we ask, “Was activation for faces significantly higher than activation for Hands, Bodies and Scrambled images?”

To answer this, it is insufficient to consider the beta weights alone. We also need to consider how noisy the data is, as reflected by the residuals. Intuitively, we can expect that the relationship between beta weights is more accurate when the residuals are small.

Question 11: Why can we be more confident about the relationship between beta weights when the residual is small? If the residual were 0 at all time points, what would this say about our model, and about the beta weights? Think about this in terms of the examples in Questions 1 and 2.

We can perform these kind of hypothesis tests using contrasts . Contrasts allow you to specify the relationship between beta weights you want to test. Let's do an example where we wish to look at voxels with significantly difference activation for Faces vs Hands.

4) Select Analysis/Overlay General Linear Model... , then click the box next to Hands until it changes to [-], set Faces to [+] and the others to [].

Note: In BrainVoyager, the Face, Hand, Bodies and Scrambled predictor labels appear black while the Constant predictor label appears gray. This is because we are not usually interested in the value of the Constant predictor so it is something we can call a "predictor of no interest" and it is grayed out to make it less salient. Later, we'll see other predictors like this.

Question 12: In the lecture, we discussed contrast vectors. What is the contrast vector for the contrast you just specified?

Figure 2-14. An example contrast for Faces vs. Hands.

Figure 2-15. The voxel beta plot shows beta weights for a given voxel when you place the cursor over it. Beta weights are relative to the baseline = 0.

Click OK to apply the contrast. Use the mouse to move around in the brain and search for hotspots of activation. By doing this, you are specifying a hypothesis test to test whether the beta weight for Faces is significantly different (and greater than) the beta weight for Hands. This hypothesis test will be applied over all voxels, and the resulting t-statistic and p-value determine the colour intensity in the resulting heat map.

Question 13: How can you interpret the colours in this heat map – does a voxel being coloured in orange-yellow vs. blue-green tell you anything about how much it activates to Faces vs Hands? Can you find any blobs that may correspond to the fusiform face area or the hand-selective region of the lateral occipitotemporal cortex?

Question 14: Imagine you wanted to use contrasts to find voxels that have significantly greater activation to Faces than to Hands, Bodies and Scrambled. What are two possible contrast vectors you could use to find these voxels? Which one is more liberal and which one is stricter?